AI automation measures are not up to the task

How O*NET misleads us about the future of work

The automation of work has begun. In October, OpenAI released GDPval, an evaluation of whether AI can do economically important tasks across a wide range of occupations. Anthropic’s Economic Index classifies Claude interactions by the economic tasks they relate to, to paint a picture of which tasks AI is most capable of performing. In a 2023 paper with over 1,300 citations so far, researchers estimated how exposed the US workforce is to AI-driven automation, using human and LLM ratings to assess whether GPT-4 could do each task in current jobs. While not focused on economic impacts, METR’s influential time horizons report measures the capabilities of AI models in terms of the length of tasks that they can complete reliably.

All of these approaches to assessing AI systems share a focus on the task as the unit of analysis. They imagine jobs as bundles of tasks – a worker does some set of tasks, a machine does some other set of tasks, and collectively this produces goods and services. In this mental model, AI automating a job means that AI can reliably do most or all of the tasks needed to perform that job.

This gives researchers a way to quantify the progress towards AI being able to automate work – by focusing on which tasks frontier models can do, which ones they cannot, and how that success rate has trended over time. These task-based exposure measures are now by far the most common way to forecast whether and when AI will automate work across the economy.
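To make the mental model concrete, here is a minimal sketch of a task-based exposure score. It is an illustration only, not the method of any of the measures named above: the `Task` fields, the importance weights, and the `ai_can_do` ratings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """One entry in a job's task list (all values below are made up)."""
    name: str
    importance: float  # hypothetical survey-style importance weight
    ai_can_do: bool    # hypothetical rating of whether AI can do the task


def exposure_score(tasks: list[Task]) -> float:
    """Importance-weighted share of a job's listed tasks rated as doable by AI."""
    total = sum(t.importance for t in tasks)
    if total == 0:
        return 0.0  # an empty task list has no measurable exposure
    exposed = sum(t.importance for t in tasks if t.ai_can_do)
    return exposed / total


# A toy job with three listed tasks.
sales_rep = [
    Task("write customer emails", 3.0, True),
    Task("hold customer calls", 4.0, False),
    Task("update sales records", 1.0, True),
]

print(exposure_score(sales_rep))
```

Note that the score treats each listed task as a separate item and sees nothing that is not on the list.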

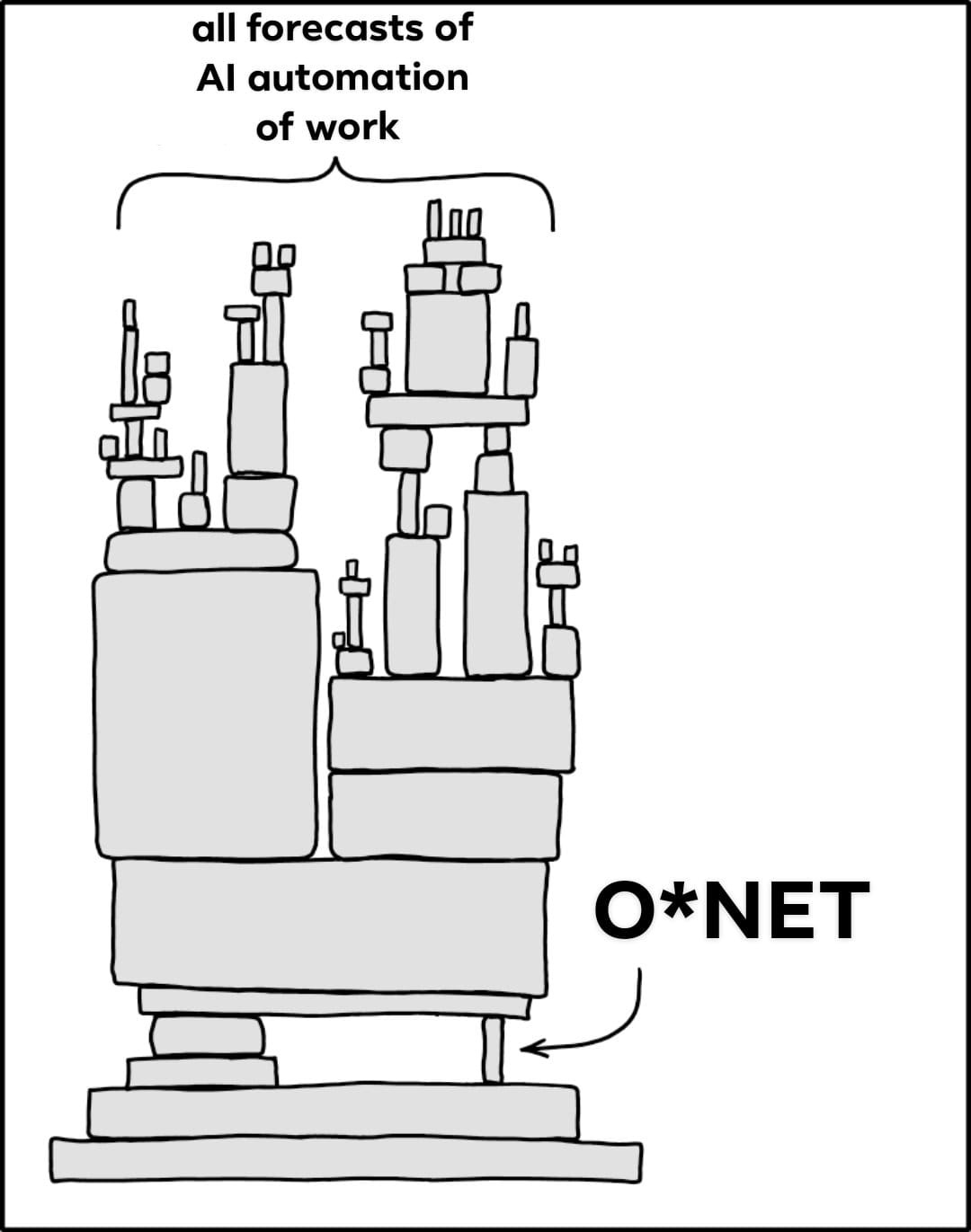

But all of these measures rely on a single dataset: the Occupational Information Network (O*NET), created by the US Department of Labor. O*NET maps all jobs in the economy to lists of tasks that are essential to that job. It is the backbone of all task-based exposure measures: OpenAI constructed GDPval by commissioning experts to write tasks that map to O*NET categorizations, and Anthropic’s Economic Index links Claude usage to O*NET tasks.

Despite O*NET’s importance to AI automation measures, it remains poorly understood. Many people who draw on task-based exposure measures to forecast AI’s impacts have never heard of O*NET, let alone studied how it is constructed. This is a problem because the way O*NET is constructed makes it look easier for AI to automate human work than it really is, and fixing that issue requires better data than we have.

O*NET: a brief but affectionate history

Economists have historically modeled work as a black box. Old-school “production function” models in economics simply modeled the output of a company as some combination of its workers and its machines, rather than unpacking work in any meaningful way. One major problem with this approach is that it was unclear what automation even meant in this framework. The framework assumed that there was some fixed ability to substitute between workers and machines. Did automation just mean making machines and workers more substitutable? If so, how would you make that asymmetric, so that machines are better able to replace workers than vice versa? The old-school models did not have good answers to these questions, and that made them poorly suited to understanding automation.

These limitations were well-known, but economists still used the production function approach because they simply didn’t have the data to do better. Companies would report their capital stocks and payrolls in balance sheet data, but they wouldn’t report what each worker was actually doing. So it was natural for economists to build models that could use those observable features. Nobody believed that there was literally a mechanical relationship between workers, machines, and output – it was just the best that they could do with their limited data.

The revolution in how economists think about work traces back to the release of O*NET in 1998. O*NET was the successor to the Department of Labor’s Dictionary of Occupational Titles (DOT), a Depression-era categorization of jobs in the economy. The DOT focused on characterizing blue-collar and agrarian work, which made it obsolete in the information technology era. O*NET was created as a modern representation of work, with Department of Labor analysts running large-scale surveys of workers across the country to get a picture of work that represented the whole economy.

A key feature of O*NET (and the DOT before it) was that it decomposed jobs into lists of tasks that were essential to those jobs. These task lists are compiled by organizational psychologists who interview workers to understand their most important work activities. They are then validated by surveying workers on how important each task on the list is to their job, and those survey responses are used to phase tasks in and out of the O*NET list (since a job’s tasks can change over time).

These task lists have become foundational to analyzing the labor market impacts of new technology. For example, Autor, Levy and Murnane (2003) classified tasks as “routine” or “nonroutine” to represent how easily they could be automated by computers, and used these measures to estimate that the introduction of computers to the workplace decreased the employment of unskilled workers (who did routine tasks) and increased the employment of skilled workers (whose nonroutine tasks were now the bottleneck to work). By distinguishing between types of jobs based on the work they do, the DOT and O*NET made it possible to find nuanced impacts of new technology on labor markets. The methodology that was initially designed to assess the impact of computers on the labor market has since been adapted to study the impact of AI, by measuring the exposure of each job to AI automation based on its task content.

We need to talk about task exposure measures

A key feature of work that task-based exposure measures fail to capture is task dependencies – situations where in order to do one task, you need to have done other tasks. Imagine a sales representative whose AI system has automated all of their email writing. This seems like a major time savings – no more time spent composing emails. But then after an email chain about an issue, a customer sends a message requesting a Zoom call with the sales rep to discuss it, triggering another task that AI cannot do in this thought experiment (a customer Zoom call). In order to have a useful call, the sales rep needs to read and understand the whole email chain. This is a task dependency: the ability to do one task (having the call) depends on having done another (reading the email chain), because the knowledge from the email task is a key input to performing the Zoom call task. Task-based exposure measures assume that tasks are independent of each other, making them overestimate the usefulness of automating some tasks without automating others as well.

In the extreme, dependencies could prevent automation entirely. Suppose producing a good takes 10 sequential tasks, but each task can only be done by the worker or machine that did the previous task. Then unless all of those tasks can be done by the machine, all of them have to be done by a worker. Division of labor opens the door for tasks to be done independently of each other, but not all tasks can be so neatly divided. And when researchers decompose jobs into discrete tasks and ask “which tasks can an AI do?”, the dependencies often disappear from view. You end up with a list of independent capabilities rather than a picture of how those capabilities need to fit together to do a job.
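The extreme case can be written down in a few lines. This is a toy sketch under the paragraph's own assumption (every task must be done by whoever did the previous one); the function names and the 9-of-10 capability list are hypothetical.

```python
def naive_machine_share(machine_can_do: list[bool]) -> float:
    """Share of tasks the machine can do, treating tasks as independent."""
    if not machine_can_do:
        return 0.0
    return sum(machine_can_do) / len(machine_can_do)


def chained_machine_share(machine_can_do: list[bool]) -> float:
    """Share of tasks the machine actually does when each task must be
    done by whoever did the previous task (the extreme case in the text)."""
    if not machine_can_do:
        return 0.0
    # One task the machine cannot do forces the worker to do the whole chain.
    return 1.0 if all(machine_can_do) else 0.0


# Hypothetical job: the machine can do 9 of the 10 sequential tasks.
capabilities = [True] * 9 + [False]

print(naive_machine_share(capabilities))    # independent-task view
print(chained_machine_share(capabilities))  # fully dependent view
```

Real jobs presumably sit somewhere between these two views, which is why the independent-task number is better read as a ceiling than as an estimate.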

Another feature of work that task-based exposure measures fail to capture is interstitial tasks – tasks that are hard to formalize, but are still an essential part of a job. When characterizing jobs, O*NET analysts focus on the most legible tasks done by each job. For a teacher, they may list “deliver instruction” and “grade assignments” and “manage classroom behavior.” These are real tasks. But a teacher also spends time having hallway conversations with colleagues about difficult students, mentally preparing for class transitions, noticing when a student is struggling emotionally and checking in with them, coordinating with other teachers on joint units, and reflecting on what worked and what didn’t in a lesson.

None of these tasks would make it into a job description, but they are so important that teachers’ unions often resort to “work-to-rule”, a type of labor action in which workers do only the tasks that are explicitly in their job description. In other words, withholding the interstitial tasks alone is enough to bring the government to the negotiating table, even though all the “main” tasks are being performed as usual.

In theory, the task model of work is meant to represent all tasks, including interstitial tasks. But task-based exposure measures do not deliver on this goal. They instead characterize jobs only through the legible tasks listed in O*NET. In reality, jobs are not just their top 5 most important tasks, or even their top 50 most important tasks. They are made up of countless small tasks that fill the cracks between the big, legible ones. Ignoring these interstitial tasks is a common blind spot in AI automation discourse. Geoffrey Hinton famously claimed that AI will automate radiology because AI models can read X-rays and scans better than human radiologists – but that is only one task among many that a radiologist has, and reading scans takes up only a third of a typical radiologist’s day.

The interstitial task problem is a more specific version of the argument that “if AI can do 99% of tasks, we will be bottlenecked by the 1% of tasks it can’t do.” It’s more specific because it identifies those remaining tasks as specifically the interstitial ones. It’s possible that in the future of work, people spend much less time on the things that used to be front-and-center focuses of their job. Teachers spend less time teaching or grading, radiologists spend less time reading scans, doctors spend less time doing diagnoses. For sufficiently extreme changes, you might question whether the job is even the same job as it was before. But you can’t measure that shift using task exposure measures – because the interstitial tasks that remain are invisible to them.

What should we do instead?

AI automation measures based on O*NET task lists are predictably misleading. They don’t capture task dependencies, so they miss how automating some tasks can create bottlenecks in others. They don’t see the interstitial tasks that hold jobs together and that are the hardest to automate. Both of these gaps bias them towards overestimating the speed at which AI will automate work.

Nevertheless, these measures are the best we have given the data that we have. I can grouse about how O*NET is constructed, but it is the richest, most systematic source of data on occupational work we possess. If you want to forecast AI’s labor market impacts across the entire economy, you don’t have a better option than to acknowledge O*NET’s limitations, and then use it anyway. The real question is: how would you create a better option than O*NET-based task measures?

Imagine a ridiculously high-resolution description of work: workers describing everything they do, at high frequency (e.g. every 30 minutes), in whatever language feels natural to them – unstructured, conversational. And imagine this measurement is repeated every day for a long time (e.g. a month). If having workers describe their own tasks at such a frequency is infeasible, you could instead pay enumerators to follow them around and record their work activities, although that approach would bring problems of its own.

The resulting work diary would be the most comprehensive possible picture of a job. It would be much messier than a simple list of tasks, but it would contain much more information. Asking people what they spent the last 30 minutes on makes it more likely that they capture all the interstitial tasks they had to do and how much time they spent on them, not just the most salient ones. By reading the sequential logic in workers’ own accounts of what they do, you can see which tasks depend on which others. You can also measure what share of working time each task takes up. And while this would be way too much information for a human to process, an LLM would be able to process these work diaries to identify recurring task patterns, measure task frequency and duration, and map dependency relationships across tasks. This would produce automation exposure measures grounded in day-to-day work behavior rather than the beliefs of O*NET analysts or worker survey responses.
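As a rough sketch of the bookkeeping this would involve, suppose an upstream step (for example, an LLM) has already turned each free-text diary entry into a short task label. The `DiaryEntry` fields, the labels, and both helper functions below are hypothetical, and the sample data is invented.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DiaryEntry:
    worker_id: str
    slot: int        # index of the 30-minute interval in the worker's log
    task_label: str  # assumed to be assigned upstream, e.g. by an LLM


def time_shares(entries: list[DiaryEntry]) -> dict[str, float]:
    """Share of logged intervals spent on each task label."""
    counts = Counter(e.task_label for e in entries)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def transitions(entries: list[DiaryEntry]) -> Counter:
    """Count how often one task label is followed by a different one in the
    same worker's log. These are candidate dependencies only: following a
    task is weaker evidence than depending on it."""
    ordered = sorted(entries, key=lambda e: (e.worker_id, e.slot))
    pairs: Counter = Counter()
    for prev, cur in zip(ordered, ordered[1:]):
        if prev.worker_id == cur.worker_id and prev.task_label != cur.task_label:
            pairs[(prev.task_label, cur.task_label)] += 1
    return pairs


# Invented log for one worker across four intervals.
entries = [
    DiaryEntry("w1", 0, "read email chain"),
    DiaryEntry("w1", 1, "customer call"),
    DiaryEntry("w1", 2, "customer call"),
    DiaryEntry("w1", 3, "hallway conversation"),
]

print(time_shares(entries))
print(transitions(entries))
```

Separating true dependencies from mere sequence would still require reading the workers' own explanations, which is the part the essay proposes handing to an LLM.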

A work diary project is simpler than some of the more elaborate task-based exposure measures. You don’t need to create synthetic work products, or recruit expert graders. You only need to recruit workers willing to keep detailed diaries, and you need the computational infrastructure to process unstructured text at scale. Both of these are solvable. And while gathering work diaries for a comprehensive set of jobs would be a monumental effort, it could still be worth gathering work diaries for a smaller set of jobs that have special importance. For example, you could try to assess whether AI could automate AI research by collecting work diaries from AI researchers.

In the absence of better data, all we can do is handle task-based exposure measures with care, and remember that automating a job is much harder than automating the most legible individual tasks of that job. Forecasts of AI automation that are based on task measures should be treated as upper bounds, not predictions.

Yes! And so much of the economics literature on AI is built on top of O*NET -- and then those estimates get re-used in other contexts for mini-models / scenarios... It's often the best available, and building scenarios is hard, but it makes me skeptical of lots of the economics papers so far.

I wonder if you could get this data from a company like Toggl.